Proposal: Preparation of the CSOs and public healthcare sector to address gender and racial biases that might arise from the wide usage of AI in order to protect and promote fundamental rights

Implementation: 2025 to 2027

Call: CERV-2024-CHAR-LITI – Promote civil society organizations’ awareness of, capacity building and implementation of the EU Charter of Fundamental Rights

Proposed Budget: 1 061 761,00€

Keywords: gender and racial biases, biomedical AI, EU Charter of Fundamental Rights

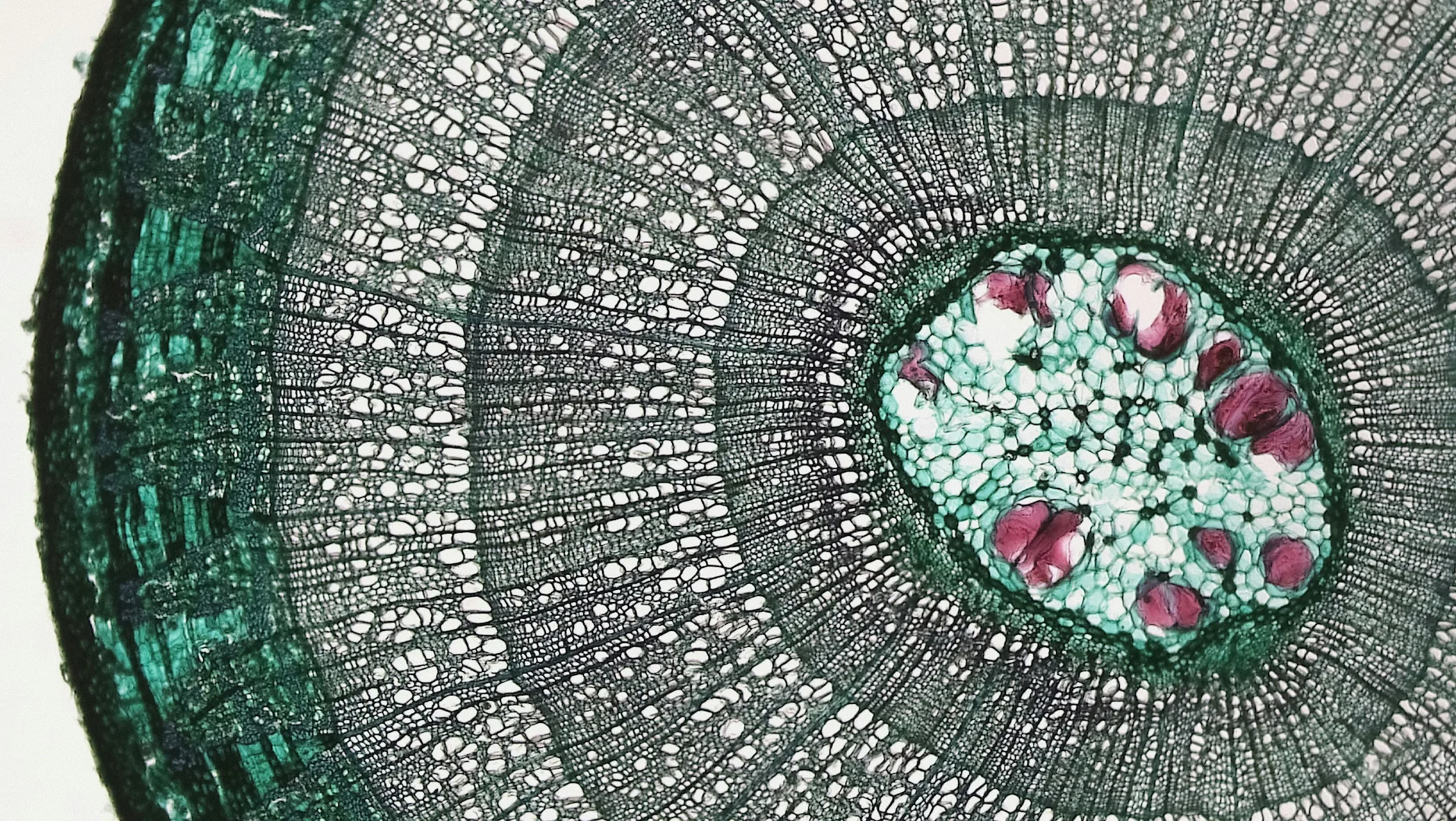

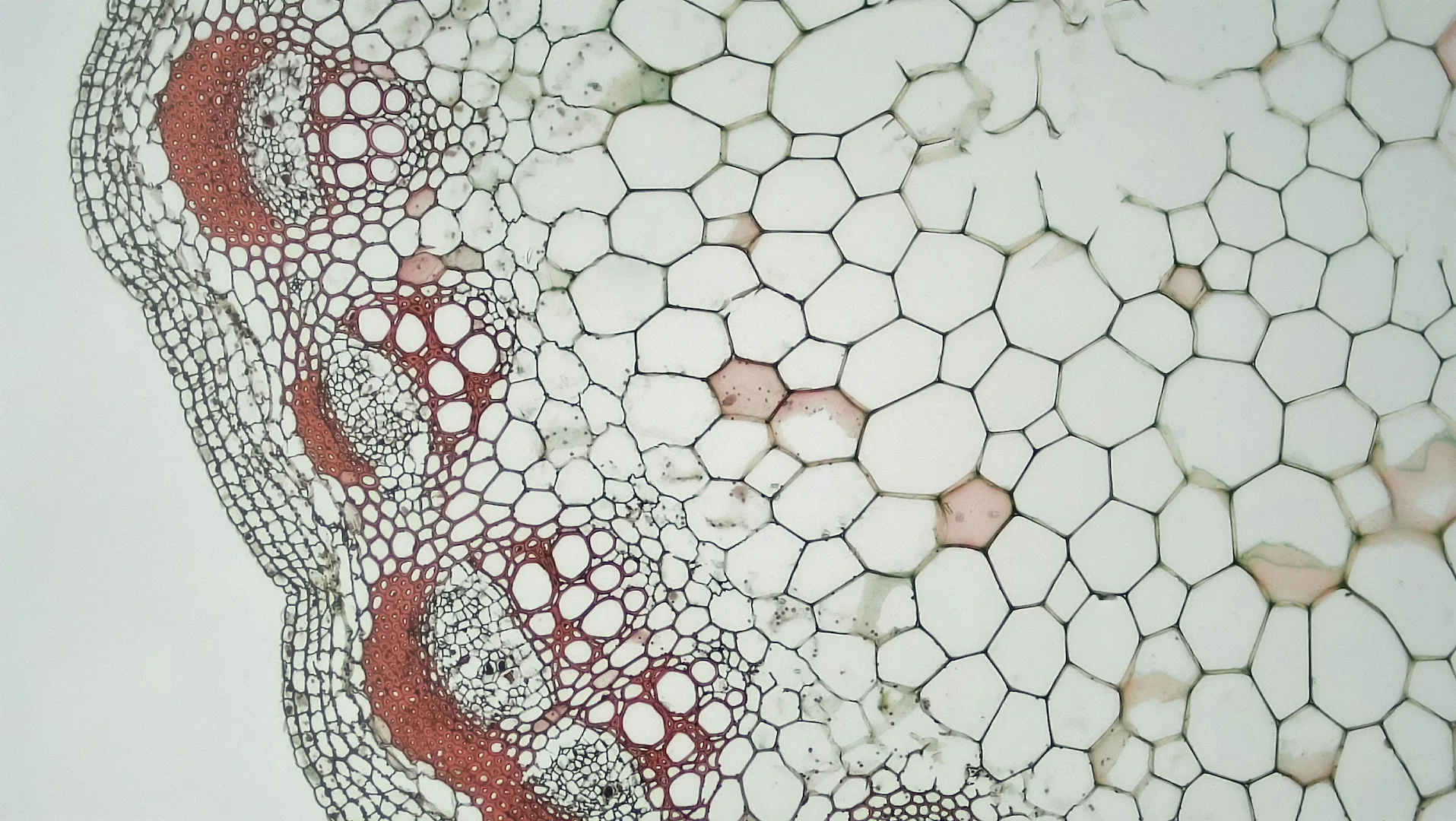

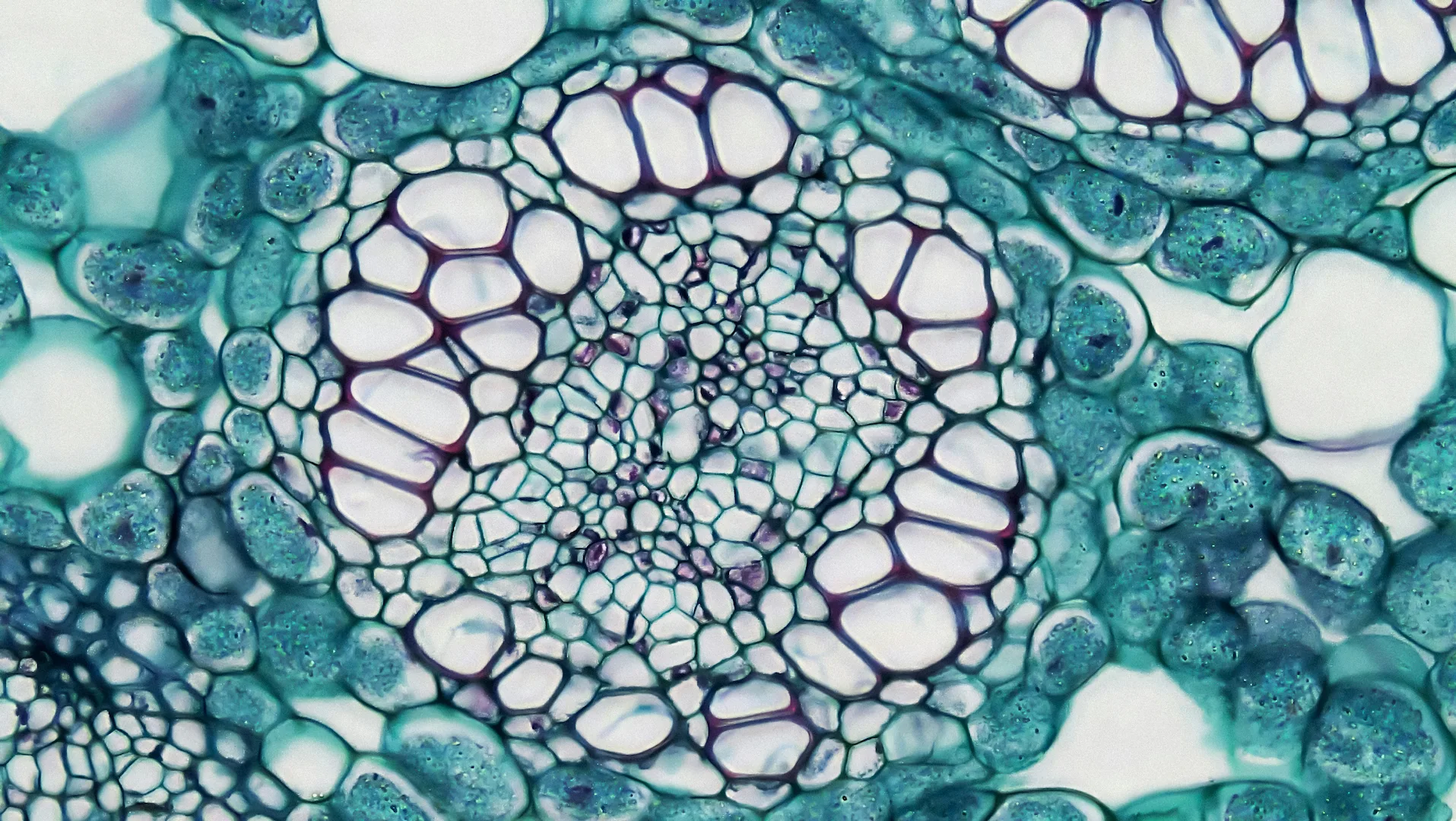

Objective: The AEQUITAS project addresses the gender and racial biases reported in biomedical AI, which can lead to misdiagnosis and mistreatment, and the threat these biases pose to the fundamental rights protected by the EU Charter. The project's aims are:

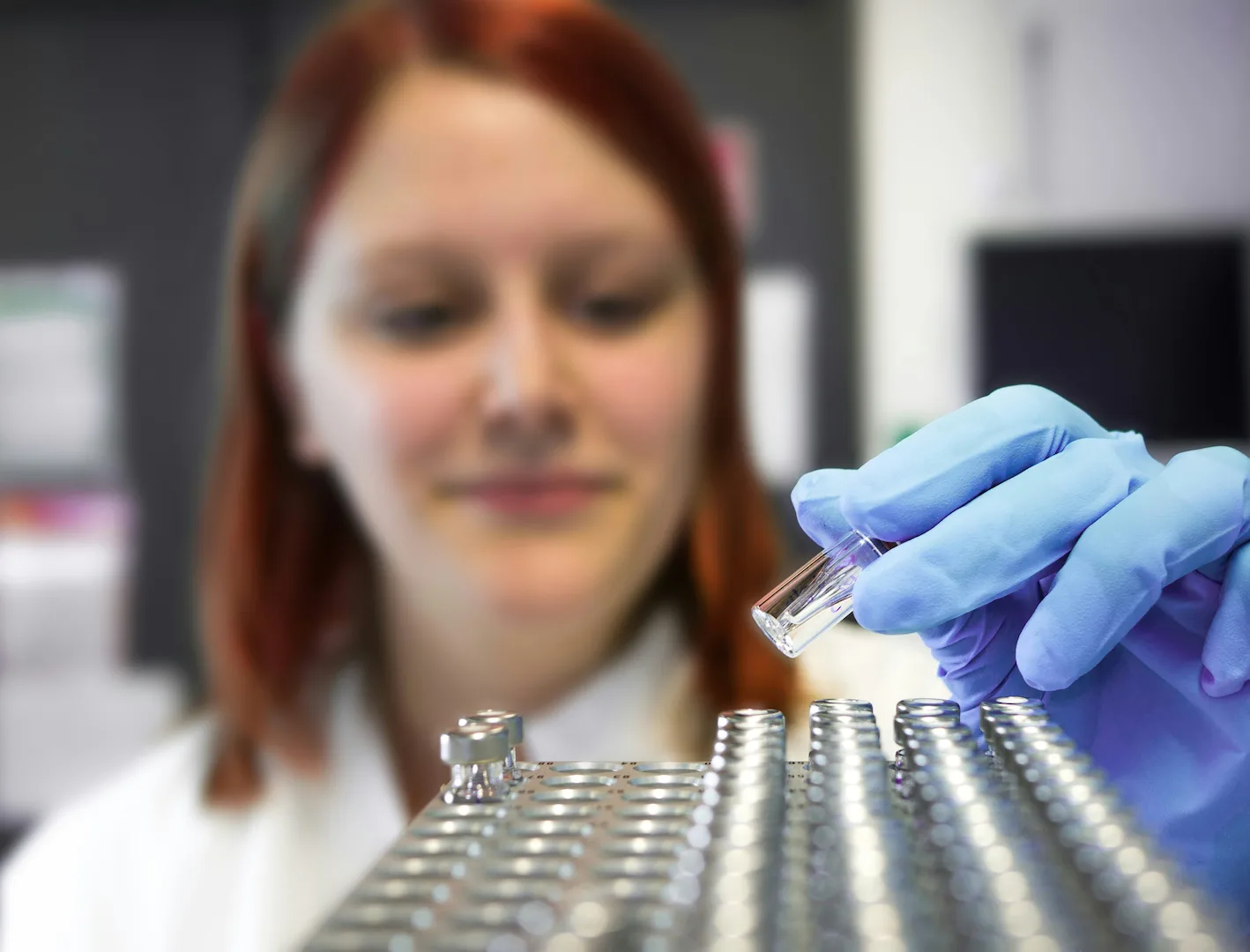

– to increase the capacity of CSOs and human rights organizations to educate the public, monitor these biases and advocate for the protection of fundamental rights, especially with regard to biomedical AI;

– to increase public hospital healthcare staff's knowledge of the biases that biomedical AI systems can exhibit as a result of the biased data they were trained on, and to help staff approach and consult these systems with a critical mindset;

– to develop an AI Regulatory Model that will be used by CSOs and public hospitals in their practices;

– to develop a European network of CSOs and public hospitals that will collaborate with and support each other in raising public awareness of the gender and racial biases of biomedical AI and of the application of the EU Charter;

– to raise awareness of the EU Charter of Fundamental Rights and its application in the AI era;

– to develop and disseminate policy recommendations advocating for the regulation of biomedical AI.

Partners:

- Innovation Hive – Kypseli Kainotomias

- Kentro Ginaikeion Meleton Kai Ereyvnon Astiki Mi K

- Universitat Zu Koln

- Center for the Study of Democracy

- C.I.P. Citizens in Power

- Moterų Informacijos Centras Asociacija Mic

- Lobby Europeo de Mujeres en Espana LEM España

- TIA Formazione Internazionale Associazione APS

- Health Citizens – European Institute

- Technologiko Panepistimio Kyprou

- Cyens Centre of Excellence

- Edex – Educational Excellence Corporation Limited

- Rite Research Institute for Technological Evolution

- Vucable

- TotalEU Production

Project Website: under development